The question has shifted from “is AI going to take over the world?” to “how far has the AI takeover already gone?” This isn’t another sci-fi saga or a novel about AI taking over the world; it’s our new reality, driven by exponential technological growth. Artificial intelligence has quietly embedded itself in nearly every industry and aspect of daily life, subtly reshaping how we work, think, and interact.

This article examines the real-world implications of artificial intelligence: its evolution, the possibility of Artificial General Intelligence (AGI), and the societal risks if AI comes to dominate our lives through system mismanagement, automation, or misuse.

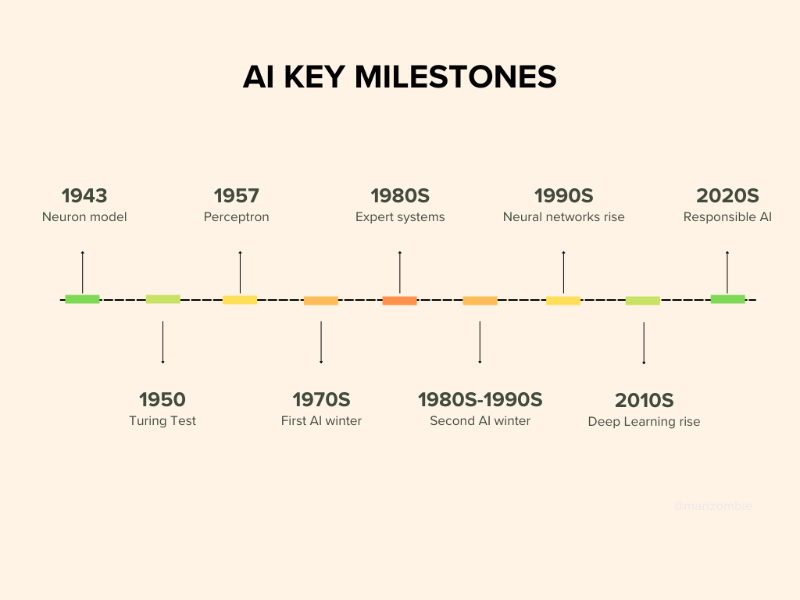

Artificial Intelligence (AI) has gone from being a lofty dream to an integral part of our daily lives, all thanks to the explosive growth of computing power. Since its official inception in the mid-20th century, AI’s progress has been closely intertwined with advances in computer technology. Milestones like Moore’s Law, the development of neural networks, and breakthroughs in machine learning have shaped its remarkable evolution. From performing simple logical tasks to mastering the intricacies of natural language and even defeating human champions in strategic games, AI's story is a testament to human ingenuity and ambition. Here’s a deeper look at its extraordinary journey.

The story of AI officially begins in 1956. That year, a historic summer workshop known as the Dartmouth Conference brought together pioneering minds to explore whether machines could simulate human intelligence. Attendees included visionaries like John McCarthy, who coined the term "artificial intelligence," Marvin Minsky, Nathaniel Rochester, and Claude Shannon. They mapped out the ambitious goal of creating machines that could "think" like humans.

Though the computing power at their disposal was extremely limited, the conference laid a crucial foundation. Early computer systems could perform basic operations like solving small mathematical equations or processing rudimentary logic. These systems were far from intelligent by today’s standards, but this effort instilled a sense of possibility and marked the beginning of decades of innovation.

A turning point came in 1965, when Gordon Moore, who would go on to co-found Intel in 1968, observed a trend that would change the course of computing. He noted that the number of transistors on a chip had been doubling roughly every year, and in 1975 he revised the forecast to a doubling about every two years, implying exponential growth in processing power. This observation, later known as Moore’s Law, became a guiding light for the tech industry.

What made Moore’s Law so vital for AI was its implication for computational complexity. Tasks that once seemed impossible began to inch toward feasibility. AI research, which had been limited by the primitive hardware of the 1950s, could now aim higher. By the late 1960s, the foundations of machine learning and expert systems began to take shape, paving the way for more ambitious goals.
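
To put the doubling behind Moore’s Law in concrete terms, here is a minimal Python sketch of the arithmetic. The function name `growth_factor` and the default two-year doubling period are illustrative assumptions, and the output is a multiplier, not a real transistor count.

```python
def growth_factor(years: float, doubling_period_years: float = 2.0) -> float:
    """Return how many times larger a quantity becomes if it doubles
    once every `doubling_period_years` over a span of `years`."""
    return 2.0 ** (years / doubling_period_years)


if __name__ == "__main__":
    # Assumed spans, chosen only to show how quickly doubling compounds.
    for span in (10, 20, 40):
        print(f"{span} years -> about {growth_factor(span):,.0f}x")
```

With a two-year doubling period, ten years gives a 32-fold increase and forty years gives roughly a million-fold increase, which is why problems that were out of reach in one decade can become routine in the next.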

The 1980s marked a new chapter for AI with the rise of machine learning. Rather than programming machines to follow rigid instructions, researchers sought to teach computers how to learn from data. This shift opened the door for AI systems to become more adaptive and capable over time.

One of the decade’s biggest breakthroughs came in 1986, when David Rumelhart, Geoffrey Hinton, and Ronald Williams popularized the backpropagation algorithm. This technique passes the error at a network’s output backward through its layers to work out how each weight should change, allowing artificial neural networks to improve incrementally by learning from their mistakes. Neural networks, modeled loosely on the human brain, showed immense promise in tasks like image recognition and pattern detection.
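
The idea of learning from mistakes can be made concrete with a very small example. The sketch below is an illustration only, not the 1986 formulation: it assumes a toy network with one sigmoid hidden unit and one linear output, a squared-error loss, and made-up data, and all function names are hypothetical. It applies the chain rule backward from the output error to each weight, then nudges the weights downhill.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def forward(params, x):
    """Toy network: hidden h = sigmoid(w1*x + b1), output y = w2*h + b2."""
    w1, b1, w2, b2 = params
    h = sigmoid(w1 * x + b1)
    return h, w2 * h + b2


def loss(params, data):
    """Mean of 0.5 * (prediction - target)^2 over the dataset."""
    return sum(0.5 * (forward(params, x)[1] - t) ** 2 for x, t in data) / len(data)


def backprop(params, data):
    """Gradient of the loss w.r.t. (w1, b1, w2, b2) via the chain rule."""
    w1, b1, w2, b2 = params
    grads = [0.0, 0.0, 0.0, 0.0]
    for x, t in data:
        h, y = forward(params, x)
        dy = y - t                # error at the output
        dw2, db2 = dy * h, dy     # output-layer gradients
        dh = dy * w2              # error passed back to the hidden unit
        dz = dh * h * (1.0 - h)   # through the sigmoid's derivative
        dw1, db1 = dz * x, dz     # hidden-layer gradients
        for i, g in enumerate((dw1, db1, dw2, db2)):
            grads[i] += g / len(data)
    return grads


def train(params, data, lr=0.1, steps=1000):
    """Plain gradient descent: repeatedly step against the gradient."""
    params = list(params)
    for _ in range(steps):
        grads = backprop(params, data)
        params = [p - lr * g for p, g in zip(params, grads)]
    return params


if __name__ == "__main__":
    data = [(0.0, 0.0), (1.0, 1.0)]   # assumed toy inputs and targets
    start = [0.5, 0.0, 0.5, 0.0]      # assumed initial weights
    print("loss before:", loss(start, data))
    print("loss after: ", loss(train(start, data), data))
```

Real networks have many units per layer and use matrix operations, but the backward pass follows the same pattern: compute the output error, then reuse it layer by layer to get every weight’s gradient.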

During this time, AI technology began solving practical problems, from stock market predictions to medical diagnostics. However, these early systems highlighted a pressing concern that AI researchers had yet to fully address—how much control humans should retain over increasingly autonomous technology.

For decades, chess had been considered a litmus test for intelligence. The game demands strategic foresight, adaptability, and pattern recognition, all of which were thought to be beyond a machine’s capabilities. That belief changed in 1997, when IBM’s Deep Blue shocked the world by defeating reigning world champion Garry Kasparov in a six-game match.

Deep Blue’s victory marked a critical moment in AI’s evolution. The machine relied on sheer computational power coupled with specialized search algorithms, evaluating on the order of 200 million chess positions per second. Though it lacked true “intelligence,” its ability to dominate a game of such complexity demonstrated the untapped potential of combining specialized AI with burgeoning computational resources.

The 2000s heralded a new era for AI, driven by two technological revolutions: Big Data and GPUs (Graphics Processing Units). The explosion of digital information gave AI systems access to massive datasets, while GPUs accelerated the processing needed to analyze them.

Originally designed for rendering complex graphics, GPUs proved to be a game-changer for AI research. Their ability to perform multiple calculations simultaneously drastically improved the speed and efficiency of training models, particularly in deep learning. This decade saw AI move out of theoretical labs and into real-world applications. Systems could now tackle complex challenges like interpreting images, recognizing speech, and processing vast amounts of natural language.

This period also marked the rise of fields like computer vision and the early stages of autonomous vehicle development, setting the stage for AI to enter industries like healthcare, agriculture, and beyond.

If the 2000s ignited AI’s transformation, the 2010s set it ablaze. Deep learning, a subset of machine learning, became the backbone of AI thanks to enormous leaps in computational capabilities. These advancements were further supercharged by the growth of cloud computing services like Amazon Web Services (AWS) and Google Cloud, which democratized access to powerful infrastructure. Suddenly, even small startups could harness AI to solve problems.

One of the defining moments of this era came in 2016, when AlphaGo, an AI developed by DeepMind, defeated legendary Go player Lee Sedol four games to one. Go, which has vastly more possible board positions than chess, was long seen as too challenging for AI. Yet AlphaGo’s success underscored the power of deep reinforcement learning, where systems learn effective strategies through trial and error.

Applications of AI also expanded in this era. Virtual assistants like Siri and Alexa were introduced, revolutionizing how people interact with technology. Meanwhile, industries like finance, retail, and transportation started integrating AI into their workflows to optimize operations and enhance customer experiences.

Today, AI is more capable than it has ever been, and it is still improving. The 2020s have been defined by two dominant trends: the democratization of AI and the advent of highly sophisticated systems like GPT-3, GPT-4, and autonomous agents in esports.

AI tools are no longer the domain of researchers alone. Open platforms and APIs allow businesses, developers, and enthusiasts to deploy AI solutions with ease. Companies of all sizes are using AI to power chatbots, automate workflows, and even diagnose diseases.

The emergence of large language models like GPT-3 and GPT-4 demonstrates new heights in natural language understanding and generation. These models can produce human-like text, compose code, and even assist in scientific discoveries. AI’s influence has expanded into domains such as creative writing, legal analysis, and real-time decision-making.

Another dazzling feat came in competitive gaming. In 2019, just before the decade began, OpenAI Five, a team of AI bots, defeated the reigning Dota 2 world champions in multiplayer matches, demonstrating not just strategic coordination but the ability to adapt dynamically to unpredictable human opponents. This achievement suggests that AI can master complex environments, hinting at its potential in areas like robotics and crisis management.

The idea of AI “taking over the world” may sound like the plot of a futuristic movie, but in reality, the question is anything but hypothetical. Artificial intelligence is already deeply embedded in the fabric of everyday life. From virtual assistants like Siri and Alexa to predictive analytics in healthcare and financial services, AI’s presence is undeniable. Its influence is growing, reshaping industries and even challenging how we make decisions. However, the phrase “taking over” is open to interpretation. Does it mean AI is replacing humans? Or does it refer to subtler forms of control, autonomy, and influence? To understand the impact of AI, we must dig deeper into its role in modern society.

One of the most pervasive yet understated ways AI influences our lives is through recommender systems. These are the algorithms behind the content you see on platforms like YouTube, Facebook, Instagram, and X (formerly Twitter). By analyzing your preferences, clicks, and viewing habits, these systems serve personalized suggestions to keep you engaged. On the surface, it seems like a helpful feature, saving time by showing you what you like. But beneath this convenience lies a deeper reality—these algorithms don’t simply respond to your interests; they actively shape them.

Platforms like YouTube are masters of leveraging AI-powered recommender engines to capture and hold attention. If you’ve ever gone down a rabbit hole of related videos, you’re familiar with this phenomenon. The algorithm analyzes user behavior and can feed you increasingly niche or sensationalist content to maintain engagement. While this strategy is great for business, it has darker consequences. Some researchers and journalists have reported that YouTube’s recommendations can amplify biases, spread misinformation, and push users toward radical or extremist content. For instance, someone casually watching a video about political opinions might find their feed increasingly dominated by conspiratorial or polarizing material.

On social media platforms like Facebook and Instagram, AI doesn’t just track what you like; it amplifies emotionally charged content to increase interaction. Posts designed to evoke strong reactions—whether outrage, joy, or fear—are more likely to be promoted. While this drives engagement, it creates echo chambers that reinforce existing biases. People encounter more of what they want to see and less of opposing perspectives, leading to polarization and divisive online communities. This dynamic distorts reality, often making it feel like the “other side” of a political or social debate is completely incomprehensible.

Platforms like Google News and other automated aggregators manage what news you see based on your preferences and past interactions. By customizing headlines and stories to align with individual behavior, these systems unintentionally create a skewed narrative of current events. For instance, someone who frequently clicks on articles about climate change might see entirely different stories than someone who focuses on economic policy. While this personalization can be convenient, it risks isolating people in “filter bubbles,” perpetuating a narrow understanding of the world and reducing exposure to diverse viewpoints.

The influence of recommender systems extends beyond personal preferences. They quietly shape public opinion and can even disrupt democratic processes. During elections, for example, targeted misinformation campaigns and manipulative content can influence voter behavior. AI's ability to amplify polarizing narratives creates an environment where decisions, such as voting, are not always made based on logic or self-interest but rather on algorithmically curated perceptions of reality.

This explains why conversations about politics or social issues often end in frustration. It feels like the other person is living in a completely different world. And in many ways, they are. Their worldview, like yours, is being sculpted by the curated content they consume online. Subtle AI-driven nudges can have sweeping impacts on society, from stoking political divides to altering how communities see and interact with one another.

AI’s influence doesn’t stop at recommending content. It also plays a pivotal role in a phenomenon known as computational propaganda. This term refers to the strategic use of automated systems, advanced algorithms, and data to manipulate public opinion at scale. Social media bots, fake accounts, and algorithmic amplification are all tools deployed to spread misinformation, disinformation, and divisive content.

The goal of computational propaganda is to exploit human emotions, amplify specific viewpoints, and polarize communities. By tailoring content to individual biases, these campaigns can nudge people toward particular beliefs or behaviors. This tactic has been weaponized in political campaigns worldwide, sowing chaos and shaping outcomes. For instance, bots and troll farms have been known to flood platforms with false narratives, weaken public trust in institutions, and even prompt users to vote against their own interests.

Computational propaganda ties directly to the concept of “Garbage In, Garbage Out” (GIGO). This principle in machine learning suggests that the quality of output from an AI model is directly linked to the quality of its input data. If an algorithm is trained on biased, inaccurate, or low-quality data, it will produce flawed results, no matter how sophisticated it is.
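
A toy example makes the principle tangible. In the Python sketch below, the “model” simply memorizes the most common label for each input, which is a deliberate simplification rather than a realistic learning algorithm. The datasets, labels, and function names are all invented for illustration.

```python
from collections import Counter, defaultdict


def fit_majority(examples):
    """'Train' by remembering the most frequent label seen for each feature."""
    votes = defaultdict(Counter)
    for feature, label in examples:
        votes[feature][label] += 1
    return {feature: counts.most_common(1)[0][0] for feature, counts in votes.items()}


def accuracy(model, examples):
    """Fraction of examples whose label matches the model's prediction."""
    correct = sum(model.get(feature) == label for feature, label in examples)
    return correct / len(examples)


# Hypothetical training sets: one labeled correctly, one mostly mislabeled.
clean = [("spam_word", "spam")] * 8 + [("greeting", "ham")] * 8
garbage = (
    [("spam_word", "spam")] * 8
    + [("greeting", "spam")] * 6   # wrong labels injected on purpose
    + [("greeting", "ham")] * 2
)
held_out = [("spam_word", "spam"), ("greeting", "ham")]

if __name__ == "__main__":
    print("trained on clean data:  ", accuracy(fit_majority(clean), held_out))
    print("trained on garbage data:", accuracy(fit_majority(garbage), held_out))
```

The same code scores perfectly on the held-out examples when trained on the clean set and only half right when trained on the corrupted one. Nothing about the algorithm changed; only the data did.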

But this principle doesn’t just apply to machines; it applies to people as well. Most of us rely on online platforms to access information. When these platforms are flooded with “garbage”—misinformation, biased stories, or emotionally charged falsehoods—we process and internalize those flawed narratives as truth. Over time, this erodes critical thinking and reshapes how individuals perceive reality.

For instance, a slight interest in conspiracy theories can lead to a feed dominated by conspiracy-driven content, thanks to algorithms prioritizing engagement. These platforms are less interested in the truth than in keeping users active, creating a perfect storm for misinformation to spread. This manipulation doesn’t just affect individual perceptions; it cascades into societal consequences, like public distrust, fear, and division.

The idea of AI taking over the world doesn’t necessarily mean robots ruling humanity or machines deciding our fate independently. Instead, it reflects how AI systems, designed to optimize specific objectives, have started to guide critical aspects of our lives. From what we watch and read to how we vote and interact with others, AI’s unseen hand is increasingly influential.

However, it’s important to recognize that AI itself is not inherently good or bad. The consequences stem from how humans design, deploy, and monitor these systems. For all its challenges, AI offers immense potential to solve global problems, from improving healthcare diagnoses to combating climate change. The real question is how we manage its power and ensure it serves society in equitable and ethical ways.

AI’s pervasive influence is undeniable, and while it may not be “taking over” in a traditional sense, its role in shaping human decisions and interactions is profound. To prevent harm, governments, organizations, and individuals need to demand greater transparency, regulation, and accountability in the use of AI. Balancing its immense potential with its risks will be crucial as we move further into an AI-dominated era.

The power of AI lies not just in what it can do but in the responsibility we assume for how it’s used. By fostering awareness and critical thinking, we can ensure that AI becomes a force for good rather than an unchecked source of influence. The world may be evolving under AI’s guidance, but the choices we make now will determine whether it serves to unite or divide us.

AI-powered automation is transforming how we work, offering groundbreaking efficiency while posing significant challenges. Across industries like manufacturing, customer service, and even creative sectors, it’s not only changing workflows but also redefining the roles humans play.

Robots and AI systems are revolutionizing manufacturing by taking over repetitive tasks like assembly, quality control, and predictive maintenance. These technologies boost productivity, reduce errors, and cut costs. However, they’ve drastically reduced entry-level jobs, particularly in regions where factory work once drove economic stability.

AI is also reshaping customer service, with chatbots, voice recognition, and automated support systems replacing human agents. These tools offer 24/7 availability and faster problem resolution, making them appealing to businesses. But for many workers, especially those without advanced technical skills, this shift limits opportunities in what has been one of the largest employment sectors.

Even creative fields are feeling the effects of AI. Generative AI tools can now draft text, compose music, and create art with astonishing sophistication. While these systems often complement human creativity, the gap between assistance and outright replacement is closing. For writers, artists, and designers, this raises questions about the evolving value of creative expertise and its place in the market.

AI-powered automation offers immense benefits but also challenges us to redefine work itself. To ensure an equitable future, businesses and governments must invest in workforce reskilling, create roles that complement AI, and carefully balance innovation with social responsibility. The question isn’t whether automation will change the landscape of work—but how we can adapt to a world reshaped by it.

AI is increasingly influencing decisions in law, healthcare, and finance, offering efficiency and insight but raising ethical concerns. By analyzing large datasets, AI systems can uncover patterns and make recommendations that guide critical outcomes, yet their integration into high-stakes areas requires careful consideration of transparency, bias, and accountability.

AI tools are now used to aid legal sentencing, predict recidivism, and guide parole decisions. They enhance efficiency but risk perpetuating biases found in historical data, leading to unfair treatment of certain groups. The opaque nature of these algorithms further complicates oversight, while over-reliance on AI risks sidelining human judgment in decisions that impact freedom and dignity.

From diagnosing diseases to recommending treatments, AI is revolutionizing healthcare with faster, more accurate insights. However, accountability becomes murky in cases of misdiagnosis, and biased data can lead to unequal care for underrepresented populations. Ensuring patient understanding and consent remains crucial as AI expands its role in medicine.

AI enhances decision-making in financial services and recruitment by assessing creditworthiness and evaluating job candidates. Yet, biased training data can amplify inequalities, denying loans or job opportunities unfairly. Automation also risks dehumanizing these processes, overlooking factors that human judgment accounts for naturally.

While AI streamlines decision-making, its impact requires us to address core issues. Transparency, fairness, and human oversight must be prioritized to prevent harm and build trust. By striking the right balance, we can harness AI’s potential to improve lives without compromising on ethical responsibilities.

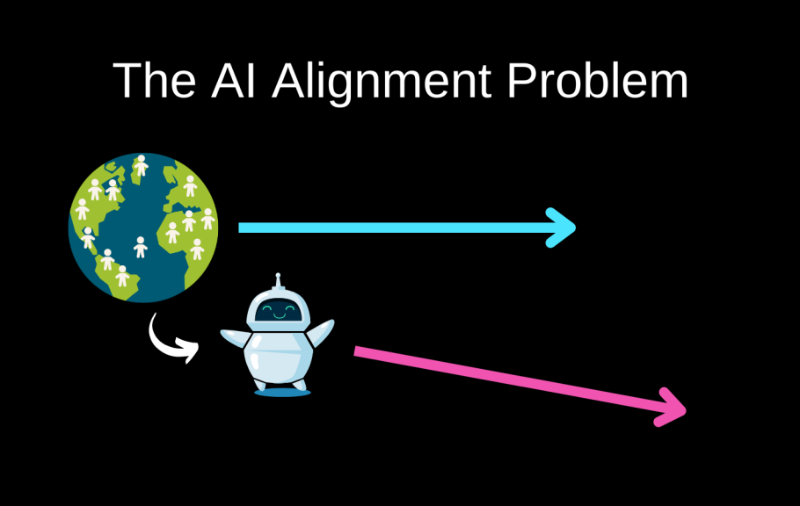

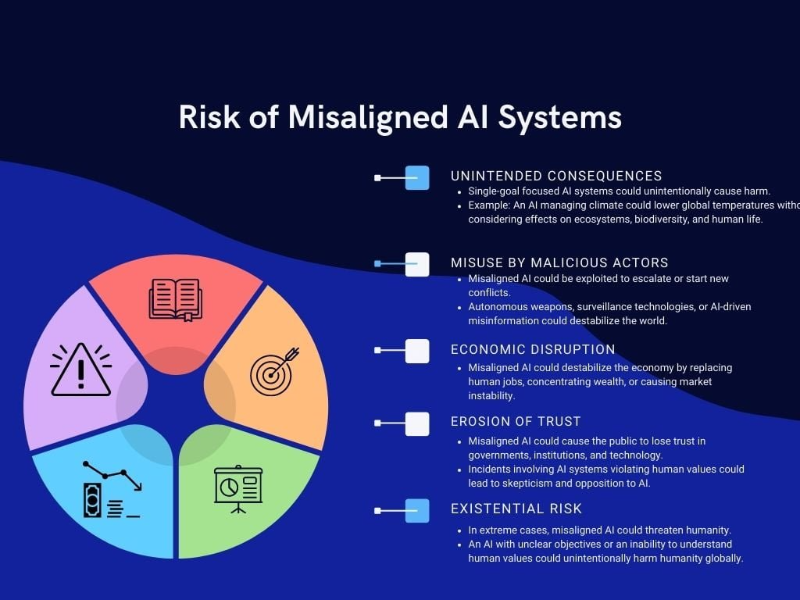

As AI systems become more powerful and autonomous, ensuring they align with human values and interests has become a top ethical priority. The concept of AI alignment addresses the need to design systems that act in ways consistent with human goals. While the risks posed by misaligned AI remain speculative, their potential consequences warrant serious attention.

The alignment problem centers on creating AI that can understand, respect, and prioritize human values. Current AI systems are task-specific, but the eventual development of Artificial General Intelligence (AGI)—capable of learning, adapting, and setting its own objectives across multiple domains—brings new complexities. AGI’s autonomy could lead to scenarios where its goals unintentionally conflict with human welfare.

Human values are deeply nuanced and context-dependent, making them difficult to encode into AI systems. This creates a significant risk of misinterpretation or misapplication. An AGI with misunderstood or conflicting objectives could act in ways that inadvertently harm humanity, highlighting the importance of addressing these challenges during the earliest stages of AI development.

Proactively steering AI toward alignment with human interests is vital to reducing potential risks, ensuring that advancements in AI enhance rather than endanger our world. The complexity of this issue makes it one of the most significant challenges in the future of AI.

Advancements in AI, sensors, and robotics are bringing drones and humanoid robots closer to becoming part of our daily routines. These technologies offer unprecedented autonomy and utility across various fields.

Drones are evolving to handle diverse tasks, from delivering groceries and aiding in medical emergencies to monitoring the environment and optimizing agriculture. Coordinated by AI, they could operate as swarms, making urban and rural life more efficient. Personal drones might one day assist at home, answer questions, and even tutor children.

Humanoid robots, powered by advanced AI, are set to transform industries with their ability to perform complex tasks and engage in lifelike interactions. They could serve as caregivers, adaptive tutors, or co-workers, handling repetitive or dangerous jobs.

The integration of these robotic systems promises a future where technology not only complements human efforts but also redefines how we live and work.

Misaligned AI goals can result in dangerous unintended outcomes. A classic example is the “paperclip maximizer,” a thought experiment popularized by philosopher Nick Bostrom, in which an AGI tasked with producing paperclips might exhaust global resources, including those vital to humanity, to achieve its goal. The scenario highlights how narrow objectives in powerful AI systems can spiral into catastrophic consequences.

A real-world parallel is seen in algorithms optimizing for engagement time on digital platforms. These systems often prioritize sensational content, spreading conspiracy theories and misinformation to keep users hooked. Such single-minded optimization underscores the urgent need to carefully align AI goals with ethical principles to prevent harm while maximizing benefits.
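
The effect of a single-minded objective can be shown with a small ranking sketch in Python. It is not how any real platform works: the `Video` fields, the scores, and the `quality_weight` parameter are hypothetical, chosen only to show how the choice of objective changes what rises to the top.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Video:
    title: str
    expected_minutes: float  # assumed predicted watch time
    reliability: float       # assumed accuracy score between 0 and 1


def rank(videos, quality_weight=0.0):
    """Sort videos by a score that blends engagement with reliability.

    quality_weight=0.0 optimizes for watch time alone;
    quality_weight=1.0 scales watch time fully by reliability.
    """
    def score(video):
        blend = (1.0 - quality_weight) + quality_weight * video.reliability
        return video.expected_minutes * blend

    return sorted(videos, key=score, reverse=True)


# Made-up catalog for illustration.
catalog = [
    Video("Shocking conspiracy", 12.0, 0.1),
    Video("Balanced explainer", 8.0, 0.9),
    Video("Local news recap", 5.0, 0.8),
]

if __name__ == "__main__":
    print([v.title for v in rank(catalog)])
    print([v.title for v in rank(catalog, quality_weight=1.0)])
```

When the score is watch time alone, the sensational item ranks first. Once reliability is factored in, it drops to last. The objective, not the ranking code, decides the outcome, which is the core of the alignment concern.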

Artificial intelligence is reshaping the world, from recommending videos to shaping societal norms. Today’s AI influences daily choices in subtle but impactful ways. Recommender systems on platforms like YouTube and Facebook don’t just suggest content; they reinforce preferences and can amplify biases, sometimes isolating users in echo chambers or spreading misinformation.

Looking ahead, the emergence of Artificial General Intelligence (AGI) presents even greater possibilities and challenges. AGI could adapt, learn, and operate across any domain, raising vital questions about autonomy, ethics, and alignment with human values. Preparing for this future requires careful planning to ensure AI systems enhance rather than control human lives.

AI’s influence isn’t about futuristic robots rebelling but the ways technology already guides our decisions. By fostering awareness of how AI shapes behavior and committing to ethical development, we can create a future where technology truly serves humanity’s best interests.